Good morning, AI enthusiasts. AI coding platform Windsurf just sailed into new territory with its first in-house model release, launching the SWE-1 family designed to streamline every step of software engineering.

With this launch coming right after OpenAI’s reported $3B acquisition of Windsurf, could the tech powering it be the real hidden gem behind the AI leader’s massive purchase?

P.S. — Our next workshop is today at 4 PM EST. Attend and learn how to design, build, and deploy your own AI systems using OpenAI’s Agents SDK. RSVP here.

In today’s AI rundown:

Windsurf’s in-house AI for developers

Poe usage charts AI popularity shifts

Automate Legal Document Analysis with Zapier

Study: LLMs struggle with back-and-forth chats

4 new AI tools & 4 job opportunities

LATEST DEVELOPMENTS

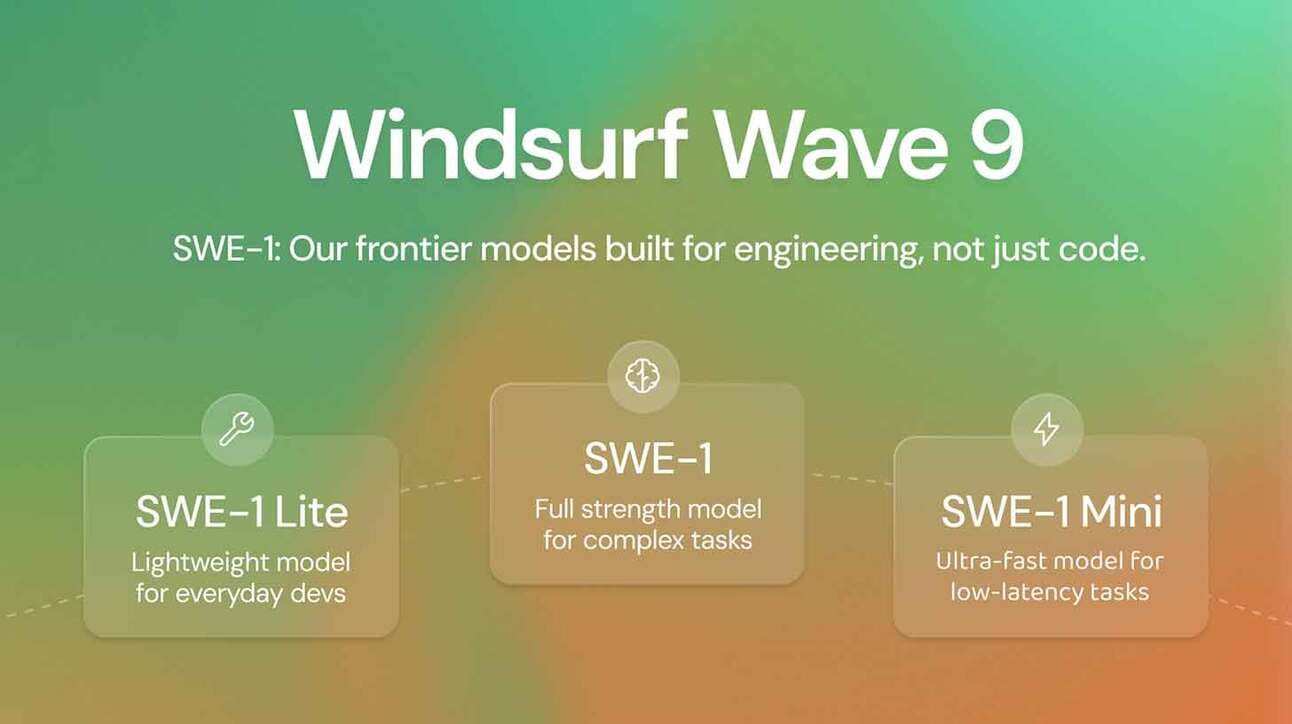

WINDSURF

Image source: Windsurf

The Rundown: AI coding platform Windsurf just launched SWE-1, its first family of in-house AI models specifically designed to assist with the entire software engineering lifecycle — not just code generation.

The details:

The SWE-1 family includes three models: SWE-1 (full-size, for paid users), SWE-1-lite (replacing Cascade Base for all users), and SWE-1-mini.

Internal benchmarks show that SWE-1 outperforms all non-frontier and open-weight models, sitting just behind frontier models like Claude 3.7 Sonnet.

Unlike traditional models focused on code generation, SWE-1 was trained to work across multiple surfaces, including editors, terminals, and browsers.

The models use a “flow awareness” system that creates a shared timeline between users and AI, allowing seamless handoffs in the development process.

Why it matters: Coding platforms like Windsurf have traditionally been application layers for third-party models, so this in-house release is a big transition. It also comes (likely strategically) just days after OpenAI’s reported $3B acquisition. With a launch this strong, there may be more to the deal than we initially thought.

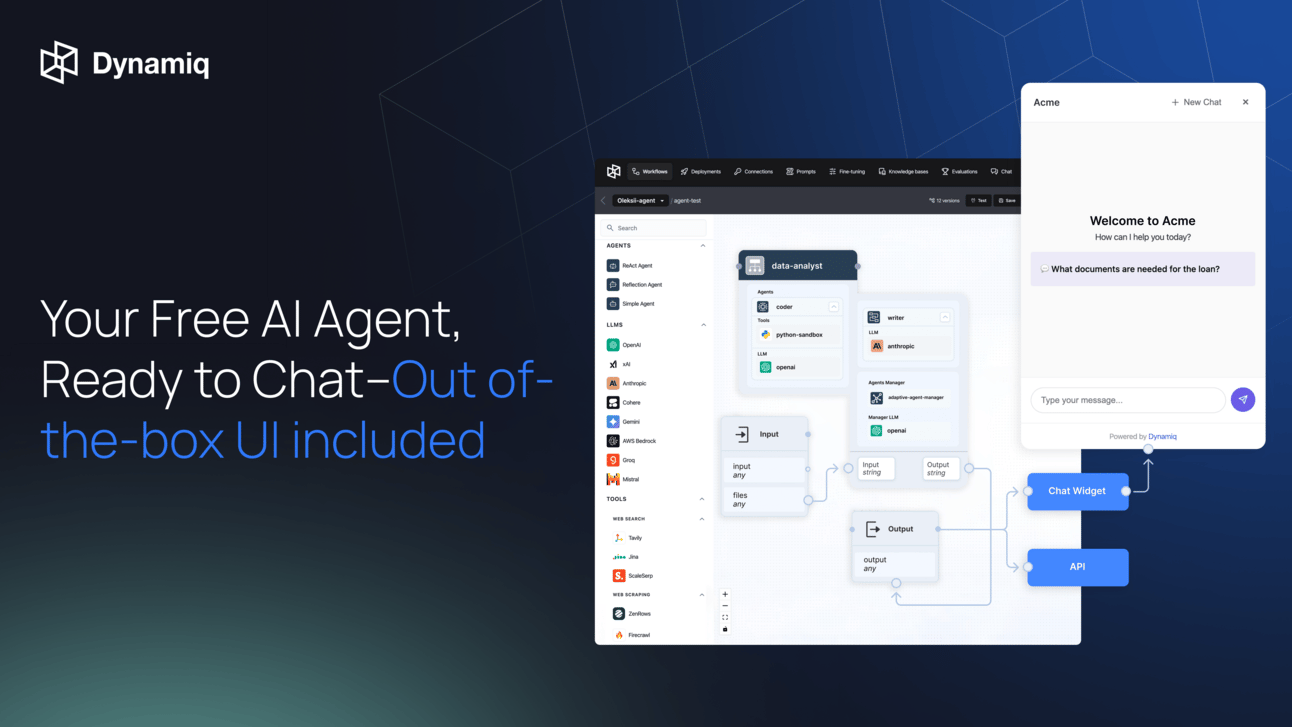

TOGETHER WITH DYNAMIQ

The Rundown: Dynamiq gets your AI agents into production within minutes with a pre-built web chat widget, helping you skip the lengthy setup process and go live fast.

Dynamiq’s developer-friendly platform lets you:

Build, test, deploy, and evaluate your first agent for free with no credit card needed

Connect your agent to an internal knowledge base from Google Drive, Dropbox, or other sources

Test and deploy instantly by copy-pasting a web-widget code snippet

POE

Image source: Poe

The Rundown: AI platform Poe released its Spring 2025 Model Usage Trends report, revealing shifts in AI user preferences across text, reasoning, image, video, and audio. Newer models are quickly gaining traction while established players lose share.

The details:

GPT-4.1 and Gemini 2.5 Pro captured 10% and 5% of message share, respectively, within weeks of launch, while Claude saw a 10% decline over the same period.

Reasoning models surged from just 2% to 10% of all text messages since January, with Gemini 2.5 Pro making up nearly a third of the subcategory.

In image generation, GPT-image-1 reached 17% of usage, challenging leaders Black Forest Labs’ FLUX and Google’s Imagen3 family.

In video, China’s Kling family became a top contender with ~30% of usage right after release, while in audio, ElevenLabs dominated with 80%.

Why it matters: These Poe usage trends offer a valuable real-world look at which models users actually prefer beyond typical benchmarks. They also show how quickly preferences can shift with new releases. With new models arriving nearly every week, this list may look drastically different in just a few months.

AI TRAINING

The Rundown: In this tutorial, you will learn how to build an automated system that analyzes legal documents uploaded to Google Drive, extracts key information, identifies potential concerns, and sends you a summary email.

Step-by-step:

Visit Zapier Agents, click the plus button, and create a “New Agent”

Configure your agent and set up Google Drive as a trigger for when new documents are added to a dedicated "Legal" folder

Add three tools: Google Drive to retrieve the file, ChatGPT to analyze the document and identify concerning clauses, and Gmail to send yourself a summary email

Test your agent with a sample document and toggle it “On” to activate

Note: Always double-check the agent’s output, since AI agents can hallucinate or make errors. If you don’t want to share sensitive information, redact or remove it before uploading the document to your Google Drive.

PRESENTED BY JACE

The Rundown: Jace AI is an executive assistant that drafts replies, schedules meetings, and manages tasks inside Gmail — helping you reclaim up to 2 hours every day.

This intelligent inbox assistant delivers:

Perfectly drafted replies waiting for you 24/7 in your authentic voice and tone

Customizable draft rules to ensure consistent communication

Smart integration with Slack, Notion, and your calendar for unified workflows

Custom AI labels that auto-organize your inbox to match your needs

Try Jace for free, then use code RUNDOWN for 20% off your first 3 months.

AI RESEARCH

Image source: Microsoft and Salesforce Research

The Rundown: A new study from Microsoft and Salesforce researchers found that LLMs significantly underperform during multi-turn conversations where user instructions are gradually revealed, often getting “lost” and failing to recover.

The details:

Researchers tested 15 leading LLMs, including Claude 3.7 Sonnet, GPT-4.1, and Gemini 2.5 Pro, across six different generation tasks.

The study found that models achieved 90% success in single-turn settings but fell to approximately 60% when instructions were spread across multiple turns.

Models tend to "get lost" by jumping to conclusions, trying solutions before gathering necessary info, and building on initial (often incorrect) responses.

Neither temperature changes nor reasoning models improved consistency in the multi-turn tests, with even top LLMs experiencing massive volatility.

Why it matters: This research exposes a major gap between how LLMs are typically evaluated and how they’re actually used. Developers may need to prioritize reliability and context window management in back-and-forth conversations, not just in one-and-done prompts.

QUICK HITS

🤖 xGen Small - Salesforce’s enterprise-ready compact LM

🧮 AlphaEvolve - AI coding agent making math and algorithmic discoveries

🎶 Stable Audio Open Small - Text-to-audio model for music samples

🧠 Psyche - Nous Research’s open, decentralized AI infrastructure

🧑‍💼 Captions - Account Executive

📝 Harvey - Content Marketing Manager

🧠 Celestial AI - Package Layout Engineer

🤝 Grammarly - Lead Customer Success Manager

You.com announced that its ARI advanced research platform outperforms OpenAI’s Deep Research with a 76% win rate, also releasing new enterprise features.

Meta is reportedly delaying the launch of its Llama Behemoth model from June to the fall due to a lack of significant improvements.

OpenAI launched its "OpenAI to Z Challenge," inviting participants to use its models to help uncover archaeological sites in the Amazon rainforest for a $250k prize.

Salesforce is acquiring AI agent startup Convergence AI, with plans to integrate the team and tech into its Agentforce platform.

Intelligent Internet released II-Medical-9B, a small medical-focused model with performance comparable to GPT-4.5 that runs locally with no inference cost.

Manus AI introduced image generation, allowing the agentic AI to accomplish visual tasks with step-by-step planning.

COMMUNITY

Join our next workshop today at 4 PM EST with Dr. Alvaro Cintas, The Rundown’s AI professor. By the end of the workshop, you’ll confidently understand how to design, build, and deploy your own AI systems using OpenAI’s Agents SDK.

RSVP here. Not a member? Join The Rundown University on a 14-day free trial.

That's it for today!

See you soon,

Rowan, Joey, Zach, Alvaro, and Jason—The Rundown’s editorial team