Good morning, AI enthusiasts. AI ‘godmother’ Dr. Fei-Fei Li just teased the next big leap in AI: spatially intelligent systems that could grasp the physics of the real world.

These systems could mark some big breakthroughs, but the question is: are we ready to take AI from understanding language to understanding the intricate details of the world around us?

P.S. We’re hiring a copywriter to test new AI tools and create educational materials that help millions understand and leverage AI. Apply here.

In today’s AI rundown:

AI ‘godmother’ advocates for spatial intelligence

Anthropic’s big cost advantage over OpenAI

Turn spreadsheet data into insights with Copilot

GPT-5 cracks a full 9x9 Sudoku puzzle

4 new AI tools, community workflows, and more

LATEST DEVELOPMENTS

WORLD LABS

Image source: Reve / The Rundown

The Rundown: Famed AI specialist Dr. Fei-Fei Li just published a new essay detailing why the next breakthrough in AI will come from spatial intelligence, or systems that can understand, reason about, and generate 3D, physics-consistent worlds.

The details:

Li argues that while LLMs have mastered abstract knowledge, they lack the ability to perceive and act in space (things like estimating distance and motion).

She said spatial understanding is the cognitive core of human intelligence and a crucial step to take AI from language to perception and action.

World models, Li said, will be key to building this intelligence, but they need the ability to create realistic 3D worlds, understand inputs like images and actions, and predict how those worlds change over time.

She added that these models will ultimately unlock new advances in robotics, science, healthcare, and design by enabling AI to reason in the real world.

Why it matters: World models that understand how objects move and interact could one day predict molecular reactions, model climate systems, or test materials. The challenge lies in teaching AI real-world physics, but momentum is building fast with Li’s World Labs, Google, and Tencent all racing to bring spatially intelligent systems to life.

TOGETHER WITH LOVART

The Rundown: Lovart is the AI design agent built for visual collaboration, with 3M+ users turning prompts into brand-ready visuals, videos, and decks all in one place. Its new Fast Mode makes creation up to 80% faster, while multi-model blending (Sora 2, Veo 3.1, Nano Banana) lets users mix motion, sound, and visuals seamlessly.

With Lovart, you can:

Turn ideas into ready-to-use assets in seconds

Skip design back-and-forths with an AI that gets your brand

Blend leading models for cinematic, ad-ready results

Try Lovart now and see why the platform just passed $30M ARR.

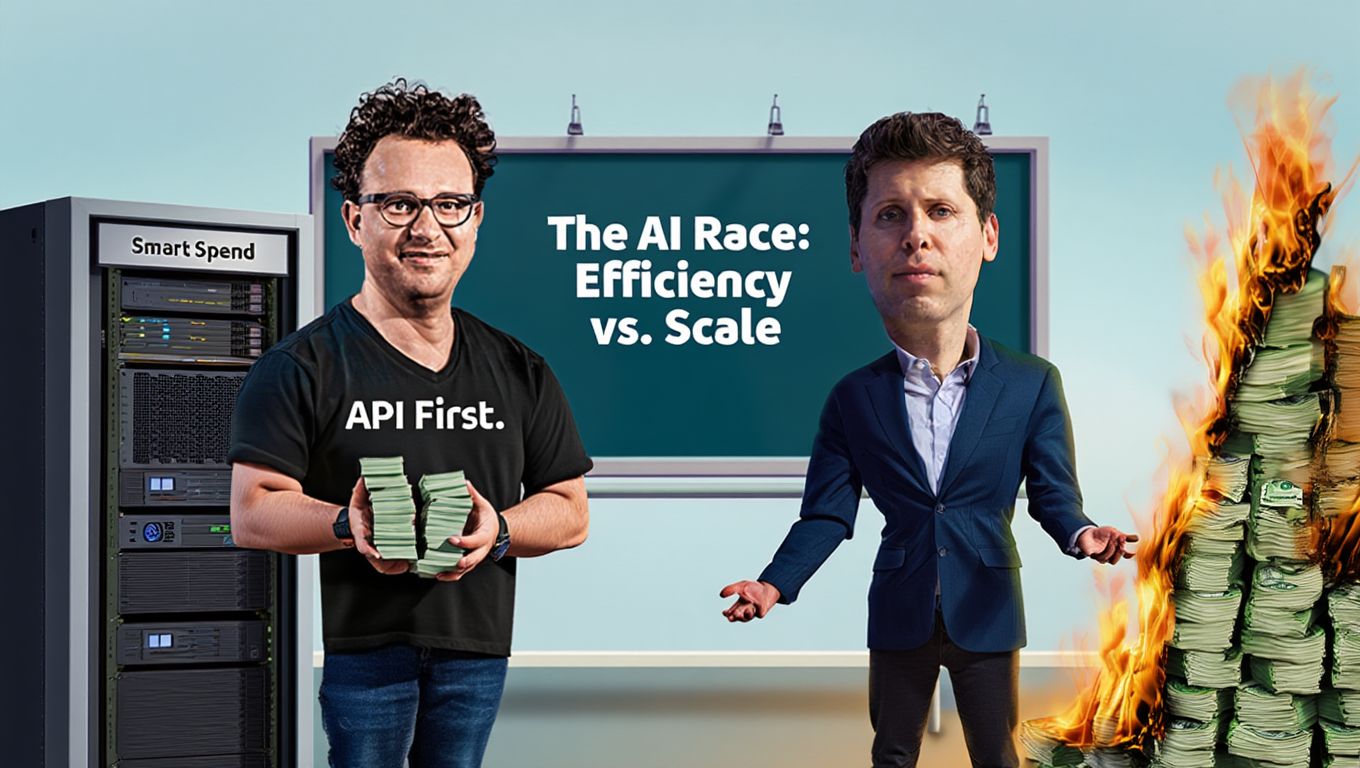

ANTHROPIC

Image source: Reve / The Rundown

The Rundown: Anthropic reportedly projects a major cost advantage over OpenAI — expecting to spend far less on compute for training and running its AI models over the next few years, according to The Information.

The details:

Anthropic estimates its compute costs at $6B for 2025, versus OpenAI’s $15B, and expects them to rise to $27B by 2028, compared to OpenAI’s $111B.

The savings are expected to come from the company’s use of chips from Amazon, Nvidia, and Google for specialized tasks, unlike OpenAI’s heavy reliance on Nvidia.

The news comes after Anthropic raised its revenue estimates, saying it expects to be cash flow positive by 2027 and generate $70B in revenue by 2028.

OpenAI, on the other hand, expects to hit the $100B revenue mark in 2028 but likely won’t be cash flow positive by 2030.
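To put the reported compute figures side by side, here is a minimal Python sketch that computes how many times more OpenAI is projected to spend than Anthropic in each year. The dollar amounts are the ones cited above; the dictionary layout and the `spend_ratio` helper are illustrative names, not anything from the report.

```python
# Projected compute spend in billions of USD, as cited in the details above.
compute_costs = {
    2025: {"Anthropic": 6, "OpenAI": 15},
    2028: {"Anthropic": 27, "OpenAI": 111},
}


def spend_ratio(year: int) -> float:
    """Return OpenAI's projected compute spend divided by Anthropic's (hypothetical helper)."""
    costs = compute_costs[year]
    return costs["OpenAI"] / costs["Anthropic"]


for year in sorted(compute_costs):
    print(f"{year}: OpenAI's projected compute spend is about {spend_ratio(year):.1f}x Anthropic's")
```

On these numbers, the gap widens from roughly 2.5x in 2025 to roughly 4.1x in 2028.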

Why it matters: Anthropic is taking a quieter, more disciplined path, building AI through efficiency and enterprise focus (80% of its revenue comes from its API). OpenAI, meanwhile, is chasing breadth with a product-heavy push across ChatGPT, research, Atlas, and more. How these choices play out will shape the next phase of AI.

AI TRAINING

The Rundown: In this tutorial, you will learn how to use Microsoft Copilot Desktop's Voice and Vision features to analyze Google Sheets or Excel data hands-free, asking questions aloud and getting instant insights without typing formulas.

Step-by-step:

Install Microsoft Copilot from the Microsoft Store (Windows) or the App Store (macOS 14.0+/M1 chip), open the app, and sign in with your Microsoft account

Go to Settings via the profile icon, toggle on “Voice Mode” and “Copilot Vision,” then open your Google Sheets/Excel file in the browser

Say “Hey Copilot,” then click the eyeglasses icon on the toolbar to enable Vision mode; Copilot scans and confirms it sees your data

Ask analysis questions such as “What’s the most revenue-generating product?” or “Calculate total revenue”; Copilot highlights cells and explains its calculations

Close the toolbar, then prompt: “Draft a professional analysis report with Executive Summary, Top Performers table, and Key Insights”

Pro tip: Use this workflow for learning new skills, reading technical documents, or studying articles.

PRESENTED BY WARP

The Rundown: Warp fuses the terminal and IDE into one place, with AI agents built in. Edit files, review diffs, and ship code, all without leaving the platform that is trusted by over 600k developers and ranks ahead of Claude Code and Gemini CLI on Terminal-Bench.

Ask Warp agents to:

Debug your Docker build errors

Summarize user logs from the last 24 hours

Onboard you to a new part of your codebase

Download Warp for free and get bonus credits for your first week.

SAKANA AI

Image source: Sakana AI

The Rundown: GPT-5 just became the first AI model to solve a full 9x9 Sudoku puzzle, according to Sakana AI’s Sudoku-Bench, a benchmark designed to test deep reasoning, spatial logic, and creativity.

The details:

Launched in May, Sudoku-Bench tests LLMs on classic and modern Sudoku variants that combine multiple rule sets and demand long, multi-step reasoning.

No model had solved a full 9x9 puzzle until GPT-5 cracked it, showing better spatial and logical reasoning than its predecessors.

GPT-5 also achieved a 33% solve rate across puzzles, roughly double that of the previous leader, marking a major step forward in benchmark performance.

67% of the puzzles remain unsolved, as models struggle with meta-reasoning (learning novel rules) and creative “break-in,” which humans use naturally.
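For readers wondering what counts as a "solved" classic 9x9 grid, the check can be sketched in a few lines of Python. This is only an illustration of the standard rules (each row, column, and 3x3 box contains the digits 1 to 9 exactly once); it is not Sudoku-Bench's own evaluation code, it ignores the variant rule sets the benchmark also covers, and the function name and sample grid are made up for the example.

```python
def is_valid_solution(grid: list[list[int]]) -> bool:
    """Check a completed 9x9 grid against classic Sudoku rules only."""
    digits = set(range(1, 10))
    # Reject anything that is not a 9x9 grid.
    if len(grid) != 9 or any(len(row) != 9 for row in grid):
        return False
    rows = [set(row) for row in grid]
    cols = [set(col) for col in zip(*grid)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in range(0, 9, 3)
        for bc in range(0, 9, 3)
    ]
    # Every row, column, and box must contain exactly the digits 1..9.
    return all(group == digits for group in rows + cols + boxes)


# A valid grid built from a shifting pattern (illustrative data, not a benchmark puzzle).
solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
print(is_valid_solution(solved))  # prints True
```

Verifying a finished grid is the easy part; the benchmark's difficulty lies in finding the solution, especially when extra variant rules apply.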

Why it matters: GPT-5’s Sudoku breakthrough shows real progress in structured reasoning, but also how far AI still is from thinking like humans do. Closing that gap will require models that can combine mathematical logic, spatial awareness, and creative insight, essentially the same blend of skills we use to reason through the unknown.

QUICK HITS

Time magazine launched an AI agent to let users query and generate text and audio briefs from its 102-year-old archive.

OpenAI is offering one year of ChatGPT Plus for free to U.S. servicemembers and veterans who retired/separated from active duty within the last 12 months.

Intel’s CTO and AI chief, Sachin Katti, departed for OpenAI, prompting CEO Lip-Bu Tan to assume oversight of the chipmaker’s AI and advanced technology divisions.

Legal AI company Clio, which provides tools to manage cases, research, and workflows, raised $500M in Series G funding at a $5B valuation.

Gamma, the platform for creating AI-generated presentations, websites, and social media posts, surpassed $100M ARR and announced a $68M raise at a $2.1B valuation.

COMMUNITY

Every newsletter, we showcase how a reader is using AI to work smarter, save time, or make life easier.

Today’s workflow comes from reader Diego V. in Berlin, Germany:

“As a project manager, I have to run a lot of meetings, with their respective meeting notes. I created an agent in Copilot that automatically turns on the meeting’s transcription. At the end of each event, it creates a new meeting notes page in my Loop workspace, populating the transcription content, and applying a custom template with discussion points, action items + owners, and links to any referred documentation.”

How do you use AI? Tell us here.

Read our last AI newsletter: OpenAI calls for superintelligence safety

Read our last Tech newsletter: This startup wants to edit embryos

Read our last Robotics newsletter: Apple’s $133B humanoid moonshot

Today’s AI tool guide: Turn spreadsheet data into insights with Copilot

RSVP to our next workshop @ 4PM EST Friday: AI Essentials for Leaders

That's it for today!

See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer — the humans behind The Rundown