
Happy Monday!

Stability AI has just released two new open-source Large Language Models.

The verdict: One of them beats GPT-4 in some areas. Let’s dig in.

In today’s AI rundown:

🤖 Stability AI releases 2 new open-source LLMs

🔒 OpenAI’s head of trust and safety steps down

🎧 Researchers can now turn your thoughts into music

🛠️ 6 New AI tools and 3 quick hits

Read time: 3 minutes

LATEST DEVELOPMENTS

STABILITY AI

Image source: Stability AI

The Rundown: Stability AI has released FreeWilly1 and FreeWilly2, two open-source Large Language Models developed by its own CarperAI lab.

Details:

Both models excel at reasoning and at understanding linguistic subtleties, as validated by various benchmarks.

The data generation process was inspired by Microsoft's Orca methodology, using high-quality instructions from specific datasets.

The FreeWilly models demonstrate exceptional performance despite being trained on a smaller dataset than previous models.

FreeWilly2 beats GPT-4 in some areas and GPT-3 in most.

Why it matters: With Llama and now FreeWilly, the open-source community is on a big win streak. As competition brews, there’s no doubt we’ll be getting faster developments in the AI space.

TOGETHER WITH COMPOSER

The Rundown: Wall Street Legend Jim Simons has generated 66% returns annually for 30 years. His secret? Algorithmic trading.

Details:

Using Composer's AI tool, you can create algorithmic trading strategies that automatically trade for you (no coding required).

Build strategies using AI and a no-code editor.

Use our free database of 1,000+ community-built strategies.

Start trading with the click of a button.

Composer's got big shots on board, with over $1 billion in trading volume.

OPENAI

The Rundown: Dave Willner, OpenAI's head of trust and safety, has announced that he is stepping down and moving to an advisory role.

Key points:

Willner’s departure raises questions about who will lead on trust and safety concerns in the field of generative AI.

OpenAI is now actively seeking a replacement for Willner to manage trust and safety.

The industry faces increasing challenges in ensuring safe and responsible use of AI technology.

Why it matters: AI safety has been a major topic of conversation, with some calling AI more dangerous than nuclear weapons. The departure of the head of trust and safety from the world’s leading AI company is a big deal.

GOOGLE RESEARCH

Image source: Google Research

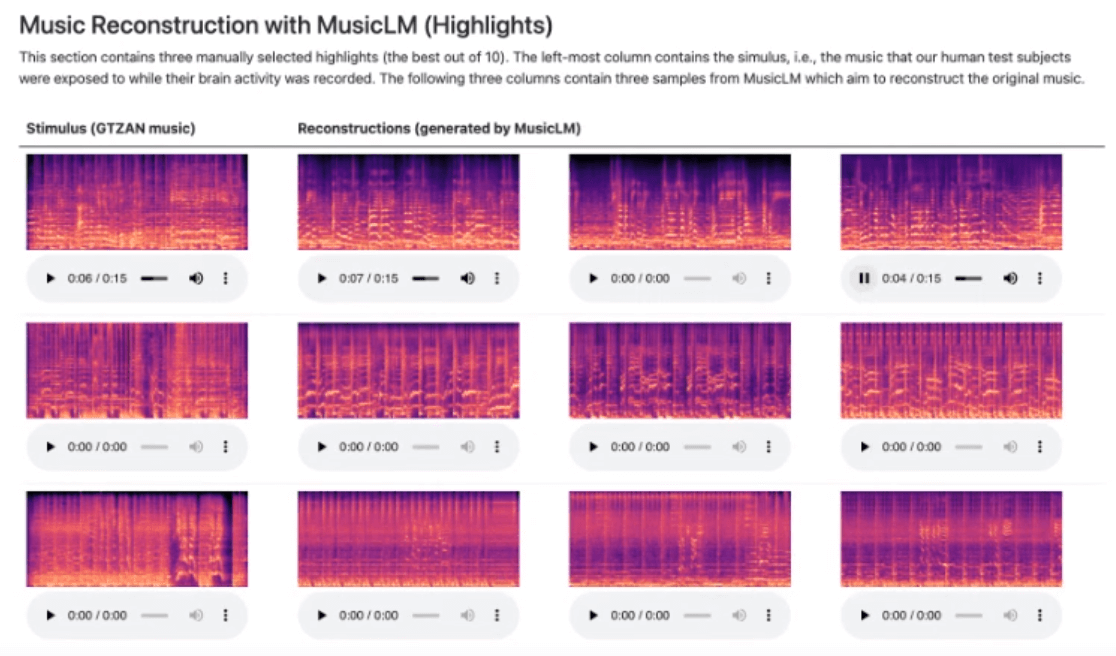

The Rundown: Researchers at Google introduced a method called “Brain2Music” to reconstruct music from human brain activity.

Details:

The approach involves using either music retrieval or a music generation model called MusicLM conditioned on embeddings derived from fMRI data.

The generated music closely resembles the musical stimuli experienced, including semantic properties like genre, instrumentation, and mood.

The study investigates the relationship between different components of MusicLM and brain activity through voxel-wise encoding modeling analysis.

Why it matters: We’re headed into a world where music is not just heard but also seen through brain patterns. The future is going to be amazing.

TRENDING TOOLS

💻 Circleback uses AI to help you get more out of your meetings by creating notes and action items for you*

📷 InvokeAI helps you generate better results from Stable Diffusion

✏️ Frontitude writes better UX copy for you

📝 BetterLegal Assistant answers any question you have based on your legal documents

🔎 AI Reverse Image Search finds similar images based on your source image

🗞️ SumUp summarizes any article and gives relevant references

🔮 Supertools organizes 100s of AI tools for you in one spot

QUICK HITS

According to the WSJ, Google co-founder Sergey Brin is “back in the development trenches,” working alongside AI researchers at Google headquarters and assisting their efforts to build Google’s powerful Gemini system.

Australian researchers just secured a $600k bag to explore the melding of human brain cells with AI. The team aims to grow brain cells in a lab dish and, according to the researchers, allow machine intelligence to “learn throughout its lifetime.”

Google has joined other leading AI companies in committing to foster responsible AI development and to support international efforts to harness AI's benefits and curb its risks. Google has made it clear that it is pushing for collective action to navigate the challenges of misinformation and bias in AI.

SPONSOR US

🦾 Get your product in front of over 250k AI enthusiasts

Our newsletter is read by thousands of tech professionals, investors, engineers, managers, and business owners around the world. Learn more or get in touch today.

* This is sponsored content

THAT’S A WRAP

Share us with your friends, and get free resources!

You currently have {{ rp_num_referrals }} referrals, only {{ rp_num_referrals_until_next_milestone }} away from receiving {{ rp_next_milestone_name }}.

View all our rewards here.

Copy and paste your personal link to share with others: {{ rp_refer_url }}

If you have anything interesting to share, please reach out to us by sending us a DM on Twitter: @rowancheung & @therundownai
